Agent development using prebuilt components

LangGraph provides both low-level primitives and high-level prebuilt components for building agent-based applications. This section focuses on the prebuilt, ready-to-use components designed to help you construct agentic systems quickly and reliably—without the need to implement orchestration, memory, or human feedback handling from scratch.

Initialize an LLM

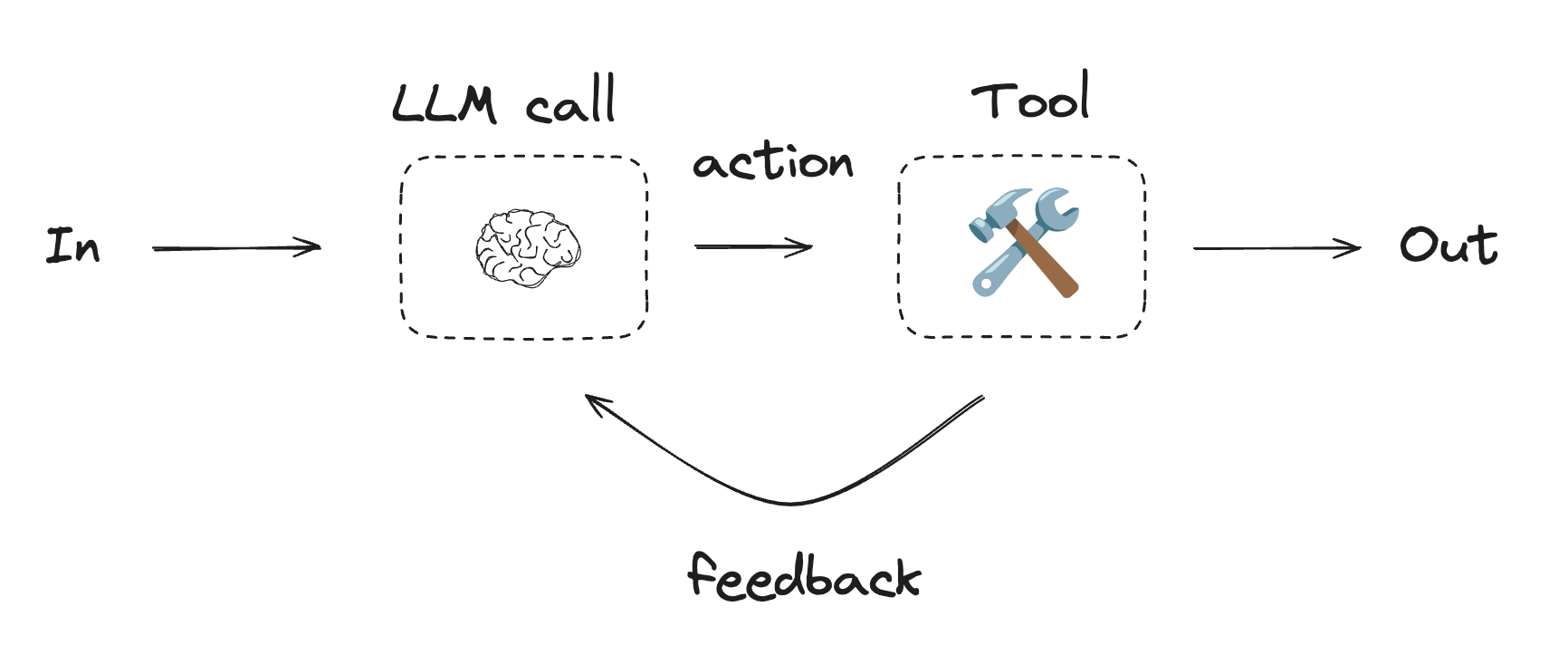

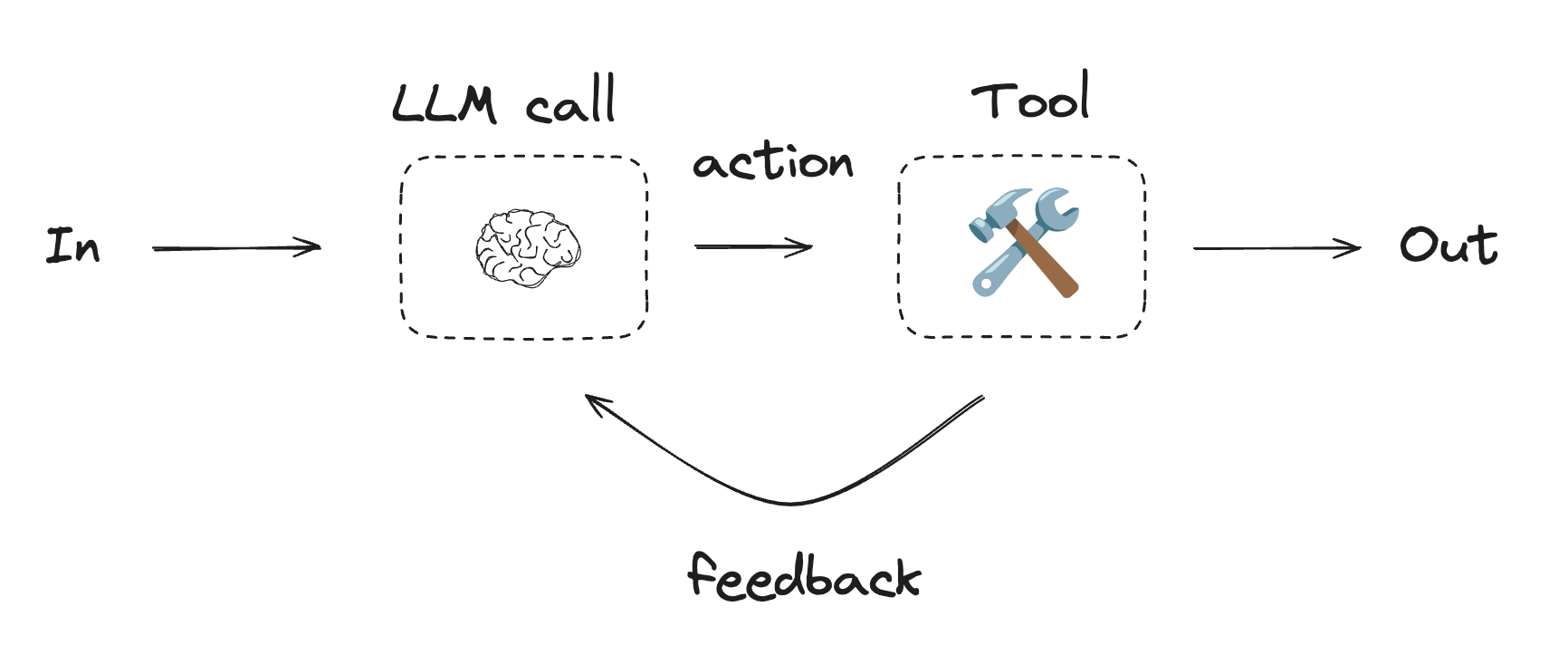

What is an agent?

An agent consists of three components: a large language model (LLM), a set of tools it can use, and a prompt that provides instructions. The LLM operates in a loop. In each iteration, it selects a tool to invoke, provides input, receives the result (an observation), and uses that observation to inform the next action. The loop continues until a stopping condition is met — typically when the agent has gathered enough information to respond to the user.
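The loop described above can be sketched in a few lines of dependency-free TypeScript. This is a conceptual illustration only: `runAgent`, `ModelFn`, `ToolFn`, `fakeModel`, and `getWeather` are hypothetical names introduced for this example, not LangGraph APIs, and the "model" is a hard-coded stand-in rather than a real LLM.

```typescript
// Conceptual sketch of the agent loop. None of these names are LangGraph APIs.

type ToolCall = { tool: string; input: string };

// On each turn the model either asks for a tool or gives a final answer.
type ModelTurn =
  | { kind: "tool_call"; call: ToolCall }
  | { kind: "final"; answer: string };

type AgentMessage = { role: "user" | "assistant" | "tool"; content: string };

// A "model" here is any function that reads the prompt and conversation so far
// and decides on the next step. A real agent would call an LLM instead.
type ModelFn = (prompt: string, history: AgentMessage[]) => ModelTurn;
type ToolFn = (input: string) => string;

function runAgent(
  model: ModelFn,
  tools: Record<string, ToolFn>,
  prompt: string,
  userInput: string,
  maxSteps = 5,
): { answer: string; history: AgentMessage[] } {
  const history: AgentMessage[] = [{ role: "user", content: userInput }];

  for (let step = 0; step < maxSteps; step++) {
    const turn = model(prompt, history);

    // Stopping condition: the model answers instead of requesting a tool.
    if (turn.kind === "final") {
      history.push({ role: "assistant", content: turn.answer });
      return { answer: turn.answer, history };
    }

    // Otherwise run the selected tool and record the observation,
    // which the model sees on the next iteration.
    const tool = tools[turn.call.tool];
    const observation = tool
      ? tool(turn.call.input)
      : `Unknown tool: ${turn.call.tool}`;
    history.push({ role: "tool", content: observation });
  }

  // Safety stop so a misbehaving model cannot loop forever.
  return { answer: "Stopped: step limit reached.", history };
}

// Stand-in model: requests the weather once, then answers from the observation.
const fakeModel: ModelFn = (_prompt, history) => {
  const last = history[history.length - 1];
  if (last && last.role === "tool") {
    return { kind: "final", answer: `Forecast: ${last.content}` };
  }
  return { kind: "tool_call", call: { tool: "getWeather", input: "Paris" } };
};

const tools: Record<string, ToolFn> = {
  getWeather: (city) => `sunny in ${city}`,
};

const result = runAgent(
  fakeModel,
  tools,
  "You are a helpful assistant.",
  "What is the weather in Paris?",
);
console.log(result.answer);
```

The `maxSteps` guard is an assumption added for safety; the text above only names the natural stopping condition, where the model has enough information to answer.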

Key features

LangGraph includes several capabilities essential for building robust, production-ready agentic systems:

- Memory integration: Native support for short-term (session-based) and long-term (persistent across sessions) memory, enabling stateful behaviors in chatbots and assistants.

- Human-in-the-loop control: Execution can pause indefinitely to await human feedback—unlike websocket-based solutions limited to real-time interaction. This enables asynchronous approval, correction, or intervention at any point in the workflow.

- Streaming support: Real-time streaming of agent state, model tokens, tool outputs, or combined streams.

- Deployment tooling: Includes infrastructure-free deployment tools. LangGraph Platform supports testing, debugging, and deployment.

    - Studio: A visual IDE for inspecting and debugging workflows.

    - Supports multiple deployment options for production.
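To make the memory and human-in-the-loop items above more concrete, here is a minimal, dependency-free sketch of the underlying idea: a run saves its state under a thread identifier, returns instead of blocking, and is resumed later with the human's decision. All names (`startRun`, `resumeRun`, `checkpoints`, `threadId`) are hypothetical and chosen for illustration; this is not LangGraph's API, and the in-memory `Map` stands in for whatever persistent store a real deployment would use.

```typescript
// Conceptual sketch only: these names are illustrative, not LangGraph APIs.

type Checkpoint = { threadId: string; pendingAction: string; log: string[] };

type RunResult =
  | { status: "paused"; threadId: string; question: string }
  | { status: "done"; log: string[] };

// In-memory stand-in for a persistent store. A durable store (a database,
// for example) is what would let a paused run survive a process restart.
const checkpoints = new Map<string, Checkpoint>();

// Start a run that needs approval before performing a sensitive action.
function startRun(threadId: string, action: string): RunResult {
  const log = [`planned: ${action}`];
  // Save state and return instead of blocking, so no connection is held open.
  checkpoints.set(threadId, { threadId, pendingAction: action, log });
  return { status: "paused", threadId, question: `Approve "${action}"?` };
}

// Resume later (seconds or days afterwards) with the human's decision.
function resumeRun(threadId: string, approved: boolean): RunResult {
  const saved = checkpoints.get(threadId);
  if (!saved) throw new Error(`No paused run for thread ${threadId}`);
  checkpoints.delete(threadId);

  const outcome = approved
    ? `executed: ${saved.pendingAction}`
    : `skipped: ${saved.pendingAction}`;
  return { status: "done", log: [...saved.log, outcome] };
}

// The run pauses and hands back a question for a human reviewer.
const paused = startRun("thread-1", "send refund email");
console.log(paused.status);

// At any later point, the saved state is loaded and the run continues.
const finished = resumeRun("thread-1", true);
console.log(finished.status);
```

Because the pause is just saved state rather than an open connection, the wait for feedback can be arbitrarily long, which is the contrast with real-time-only approaches drawn in the list above.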

Package ecosystem

The high-level, prebuilt components are organized into several packages, each with a specific focus:

| Package | Description | Installation |
|---|---|---|
| `langgraph` | Prebuilt components to create agents | `npm install @langchain/langgraph @langchain/core` |
| `langgraph-supervisor` | Tools for building supervisor agents | `npm install @langchain/langgraph-supervisor` |
| `langgraph-swarm` | Tools for building a swarm multi-agent system | `npm install @langchain/langgraph-swarm` |
| `langchain-mcp-adapters` | Interfaces to MCP servers for tool and resource integration | `npm install @langchain/mcp-adapters` |
| `agentevals` | Utilities to evaluate agent performance | `npm install agentevals` |