4. Add human-in-the-loop controls

Agents can be unreliable and may need human input to successfully accomplish tasks. Similarly, for some actions, you may want to require human approval before they run, to ensure that everything proceeds as intended.

LangGraph’s persistence layer supports human-in-the-loop workflows, allowing execution to pause and resume based on user feedback. The primary interface to this functionality is the interrupt function. Calling interrupt inside a node pauses execution. Execution can then be resumed, together with new input from a human, by passing in a Command.

interrupt is ergonomically similar to Node.js’s built-in readline.question() function, with some caveats.
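The pause-and-resume cycle can be seen in isolation with a one-node graph that uses no chat model. This is a minimal sketch that assumes a recent @langchain/langgraph release exporting interrupt, Command and MemorySaver. The node name askHuman, the state fields, and the thread ID are illustrative only.

```typescript
import {
  Annotation,
  Command,
  END,
  MemorySaver,
  START,
  StateGraph,
  interrupt,
} from "@langchain/langgraph";

// Illustrative state: a question for the human and the answer they give back.
const State = Annotation.Root({
  question: Annotation<string>,
  answer: Annotation<string>,
});

// Hypothetical node: interrupt() pauses the run here. When the run is resumed,
// interrupt() returns the value that was passed as Command({ resume }).
function askHuman(state: typeof State.State) {
  const answer = interrupt({ question: state.question }) as string;
  return { answer };
}

async function pauseAndResume(humanAnswer: string) {
  // A checkpointer is required so the paused run can be picked up later.
  const app = new StateGraph(State)
    .addNode("askHuman", askHuman)
    .addEdge(START, "askHuman")
    .addEdge("askHuman", END)
    .compile({ checkpointer: new MemorySaver() });

  const config = { configurable: { thread_id: "demo-thread" } };

  // First call: execution stops inside askHuman.
  await app.invoke({ question: "Approve this action?" }, config);
  const paused = await app.getState(config);

  // Second call: resume the same thread, handing the human's input to interrupt().
  const final = await app.invoke(new Command({ resume: humanAnswer }), config);
  return { pausedAt: paused.next, answer: final.answer };
}

pauseAndResume("yes").then((result) => console.log(result));
```

One caveat relative to readline.question(): on resume, the interrupted node runs again from its first line. Any code placed before interrupt() therefore executes a second time.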

This tutorial builds on Add memory.

1. Add the human_assistance tool

Starting with the existing code from the Add memory to the chatbot tutorial, add the human_assistance tool to the chatbot. This tool uses interrupt to receive information from a human.
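A minimal sketch of such a tool follows. It assumes the tool() helper from @langchain/core and zod for the input schema. Three details are assumptions chosen to line up with the resume step later on: the name humanAssistance (the spelling this tutorial uses further down), the description text, and the { data } shape of the human's reply.

```typescript
import { tool } from "@langchain/core/tools";
import { interrupt } from "@langchain/langgraph";
import { z } from "zod";

// Assumed shape of the human's reply, delivered via Command({ resume }).
type HumanReply = { data: string };

const humanAssistance = tool(
  async ({ query }) => {
    // Pauses the graph and surfaces { query } to whoever is watching the thread.
    // When the run is resumed, interrupt() returns the resume payload instead.
    const humanResponse = interrupt({ query }) as HumanReply;
    return humanResponse.data;
  },
  {
    name: "humanAssistance",
    description: "Request assistance from a human.",
    schema: z.object({
      query: z.string().describe("Human-readable question for the human"),
    }),
  },
);

console.log(humanAssistance.name);
```

Calling this tool directly, outside a running graph, is expected to fail, because interrupt() needs the graph's execution context. Inside the graph, the tools node invokes it.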

Let’s first select a chat model:
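The example below uses Anthropic as the provider. This sketch assumes the @langchain/anthropic package and an ANTHROPIC_API_KEY environment variable. The model name is a placeholder, and any chat model that supports tool calling could be used instead.

```typescript
import { ChatAnthropic } from "@langchain/anthropic";

// Placeholder model name: substitute any tool-calling model you have access to.
const MODEL_NAME = "claude-3-5-sonnet-latest";

// Builds the model on demand, so loading this file never requires a key.
// When apiKey is omitted, ChatAnthropic falls back to ANTHROPIC_API_KEY.
function selectChatModel(apiKey?: string): ChatAnthropic {
  return new ChatAnthropic({ model: MODEL_NAME, temperature: 0, apiKey });
}
```

Inside the chatbot node, the model would typically be bound to the tools with bindTools([...]) before being invoked on state.messages. That binding is what lets it emit a humanAssistance tool call.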

We can now incorporate it into our StateGraph with an additional tool:
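One way to wire this up is sketched below. It assumes the prebuilt ToolNode and toolsCondition from @langchain/langgraph/prebuilt, together with the MessagesAnnotation state. To keep the sketch runnable without an API key, the LLM-backed chatbot node is replaced by a hypothetical scriptedChatbot. That stand-in requests human help once, then replies after it receives the tool result. In the tutorial, this node would instead call the chat model bound to the tools. The call ID and query text are illustrative.

```typescript
import { AIMessage } from "@langchain/core/messages";
import { tool } from "@langchain/core/tools";
import { END, MessagesAnnotation, START, StateGraph, interrupt } from "@langchain/langgraph";
import { ToolNode, toolsCondition } from "@langchain/langgraph/prebuilt";
import { z } from "zod";

const humanAssistance = tool(
  async ({ query }) => (interrupt({ query }) as { data: string }).data,
  {
    name: "humanAssistance",
    description: "Request assistance from a human.",
    schema: z.object({ query: z.string() }),
  },
);
const tools = [humanAssistance];

// Hypothetical stand-in for the LLM call: ask for help first, answer once a tool result arrives.
async function scriptedChatbot(state: typeof MessagesAnnotation.State) {
  const last = state.messages[state.messages.length - 1];
  if (last._getType() === "tool") {
    return { messages: [new AIMessage(`A human expert says: ${last.content}`)] };
  }
  const toolCall = { id: "call_1", name: "humanAssistance", args: { query: "Need expert guidance." } };
  return { messages: [new AIMessage({ content: "", tool_calls: [toolCall] })] };
}

// chatbot -> tools when the last AI message contains tool calls, otherwise chatbot -> END.
const graphBuilder = new StateGraph(MessagesAnnotation)
  .addNode("chatbot", scriptedChatbot)
  .addNode("tools", new ToolNode(tools))
  .addEdge(START, "chatbot")
  .addConditionalEdges("chatbot", toolsCondition, ["tools", END])
  .addEdge("tools", "chatbot");
```

The scripted node emits one tool call and then a plain reply. As a result, toolsCondition routes to tools once and then to END.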

For more information and examples of human-in-the-loop workflows, see Human-in-the-loop.

2. Compile the graph

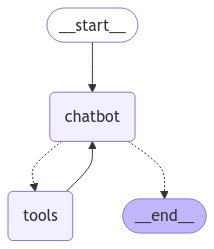

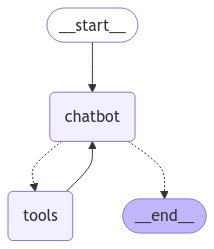

We compile the graph with a checkpointer, as before.

3. Visualize the graph

Visualizing the graph, you get the same layout as before, just with the added tool!
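The sketch below compiles the graph and renders its layout. It assumes MemorySaver as the checkpointer and the drawMermaid() method on the drawable graph returned by getGraph(). So that it runs on its own, it includes a condensed version of the wiring with a hypothetical placeholder chatbot node; only the graph's shape matters for drawing it.

```typescript
import { AIMessage } from "@langchain/core/messages";
import { tool } from "@langchain/core/tools";
import { END, MemorySaver, MessagesAnnotation, START, StateGraph, interrupt } from "@langchain/langgraph";
import { ToolNode, toolsCondition } from "@langchain/langgraph/prebuilt";
import { z } from "zod";

const humanAssistance = tool(
  async ({ query }) => (interrupt({ query }) as { data: string }).data,
  {
    name: "humanAssistance",
    description: "Request assistance from a human.",
    schema: z.object({ query: z.string() }),
  },
);

// Hypothetical placeholder node; it is never run here, only drawn.
async function chatbot(_state: typeof MessagesAnnotation.State) {
  return { messages: [new AIMessage("placeholder reply")] };
}

// Compile with an in-memory checkpointer so runs on this graph can be paused and resumed.
const app = new StateGraph(MessagesAnnotation)
  .addNode("chatbot", chatbot)
  .addNode("tools", new ToolNode([humanAssistance]))
  .addEdge(START, "chatbot")
  .addConditionalEdges("chatbot", toolsCondition, ["tools", END])
  .addEdge("tools", "chatbot")
  .compile({ checkpointer: new MemorySaver() });

// getGraph() returns a drawable graph; drawMermaid() renders it as Mermaid source text.
const mermaidSource = app.getGraph().drawMermaid();
console.log(mermaidSource);
```

Pasting the printed text into a Mermaid renderer should show START leading to chatbot, conditional edges from chatbot to tools or the end node, and an edge from tools back to chatbot.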

4. Prompt the chatbot

Now, prompt the chatbot with a question that will engage the new human_assistance tool:
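The prompt-and-inspect step is sketched below. To keep it runnable offline, a hypothetical scriptedChatbot node stands in for the LLM, which in the tutorial decides on its own to call the tool. The thread ID and prompt text are illustrative. getState() reports the pending node in next and exposes the interrupt payload on the pending task.

```typescript
import { AIMessage, HumanMessage } from "@langchain/core/messages";
import { tool } from "@langchain/core/tools";
import { END, MemorySaver, MessagesAnnotation, START, StateGraph, interrupt } from "@langchain/langgraph";
import { ToolNode, toolsCondition } from "@langchain/langgraph/prebuilt";
import { z } from "zod";

const humanAssistance = tool(
  async ({ query }) => (interrupt({ query }) as { data: string }).data,
  {
    name: "humanAssistance",
    description: "Request assistance from a human.",
    schema: z.object({ query: z.string() }),
  },
);

// Hypothetical stand-in for the LLM-backed node: asks for help until a tool result arrives.
async function scriptedChatbot(state: typeof MessagesAnnotation.State) {
  const last = state.messages[state.messages.length - 1];
  if (last._getType() === "tool") {
    return { messages: [new AIMessage(`A human expert says: ${last.content}`)] };
  }
  const toolCall = { id: "call_1", name: "humanAssistance", args: { query: "How should I build an AI agent?" } };
  return { messages: [new AIMessage({ content: "", tool_calls: [toolCall] })] };
}

async function promptChatbot() {
  const app = new StateGraph(MessagesAnnotation)
    .addNode("chatbot", scriptedChatbot)
    .addNode("tools", new ToolNode([humanAssistance]))
    .addEdge(START, "chatbot")
    .addConditionalEdges("chatbot", toolsCondition, ["tools", END])
    .addEdge("tools", "chatbot")
    .compile({ checkpointer: new MemorySaver() });

  const config = { configurable: { thread_id: "1" } };
  await app.invoke(
    { messages: [new HumanMessage("I need some expert guidance for building an AI agent. Could you request assistance for me?")] },
    config,
  );

  // The run is now paused inside the tools node; the snapshot records where it stopped.
  const snapshot = await app.getState(config);
  return {
    next: snapshot.next, // nodes waiting to run
    payload: snapshot.tasks[0]?.interrupts?.[0]?.value, // the { query } passed to interrupt()
  };
}

promptChatbot().then(({ next, payload }) => {
  console.log("Paused before:", next);
  console.log("Interrupt payload:", payload);
});
```

On this run, next should contain "tools", the node where execution stopped.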

The chatbot generated a tool call, but then execution has been interrupted. If you inspect the graph state, you see that it stopped at the tools node.

Additional information: take a closer look at the humanAssistance tool. Calling interrupt inside the tool pauses execution. Progress is persisted by the checkpointer, so if it persists to Postgres, the run can be resumed at any time as long as the database is available. In this example, progress is held by the in-memory checkpointer, so the run can be resumed only while the JavaScript runtime is still running.

5. Resume execution

To resume execution, pass a Command object containing the data expected by the tool. The format of this data can be customized to your needs. For this example, use an object with a "data" key.

The input has been received and processed as a tool message. Review the resumed call’s LangSmith trace to see exactly what work was done. Notice that the state is loaded in the first step so that our chatbot can continue where it left off.

Congratulations! You’ve used an interrupt to add human-in-the-loop execution to your chatbot, allowing for human oversight and intervention when needed. This widens the range of UIs you can build around your AI systems. Since you have already added a checkpointer, as long as the underlying persistence layer is running, the graph can be paused indefinitely and resumed at any time as if nothing had happened.

Check out the code snippet below to review the graph from this tutorial:
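Below is a condensed, offline version of the whole flow, from prompt to resume. Three parts are assumptions. The chatbot node is a hypothetical scripted stand-in for the tool-bound LLM. The tool is named humanAssistance. The human's reply is an object with a "data" key, which the tool returns as its result. The thread ID and message texts are illustrative.

```typescript
import { AIMessage, HumanMessage } from "@langchain/core/messages";
import { tool } from "@langchain/core/tools";
import { Command, END, MemorySaver, MessagesAnnotation, START, StateGraph, interrupt } from "@langchain/langgraph";
import { ToolNode, toolsCondition } from "@langchain/langgraph/prebuilt";
import { z } from "zod";

const humanAssistance = tool(
  async ({ query }) => (interrupt({ query }) as { data: string }).data,
  {
    name: "humanAssistance",
    description: "Request assistance from a human.",
    schema: z.object({ query: z.string() }),
  },
);

// Hypothetical stand-in for the LLM-backed node: ask for help, then relay the answer.
async function scriptedChatbot(state: typeof MessagesAnnotation.State) {
  const last = state.messages[state.messages.length - 1];
  if (last._getType() === "tool") {
    return { messages: [new AIMessage(`A human expert says: ${last.content}`)] };
  }
  const toolCall = { id: "call_1", name: "humanAssistance", args: { query: "How should I build an AI agent?" } };
  return { messages: [new AIMessage({ content: "", tool_calls: [toolCall] })] };
}

function buildApp() {
  return new StateGraph(MessagesAnnotation)
    .addNode("chatbot", scriptedChatbot)
    .addNode("tools", new ToolNode([humanAssistance]))
    .addEdge(START, "chatbot")
    .addConditionalEdges("chatbot", toolsCondition, ["tools", END])
    .addEdge("tools", "chatbot")
    .compile({ checkpointer: new MemorySaver() });
}

async function runConversation(humanAnswer: string) {
  const app = buildApp();
  const config = { configurable: { thread_id: "1" } };

  // 1. Prompt: the chatbot requests help and the tool interrupts the run.
  await app.invoke({ messages: [new HumanMessage("Could you ask an expert how I should build my agent?")] }, config);
  const pausedAt = (await app.getState(config)).next;

  // 2. Resume: the Command's resume value becomes interrupt()'s return value inside the tool.
  const result = await app.invoke(new Command({ resume: { data: humanAnswer } }), config);

  const toolMessage = result.messages.find((m) => m._getType() === "tool");
  const finalMessage = result.messages[result.messages.length - 1];
  return {
    pausedAt,
    toolResult: toolMessage ? String(toolMessage.content) : undefined,
    finalReply: String(finalMessage.content),
  };
}

runConversation("We recommend building your agent with LangGraph.").then((r) => console.log(r));
```

To use a real model, replace scriptedChatbot with a node that invokes the chat model bound to [humanAssistance] through bindTools and returns the resulting message.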